Digital Citizen Corner

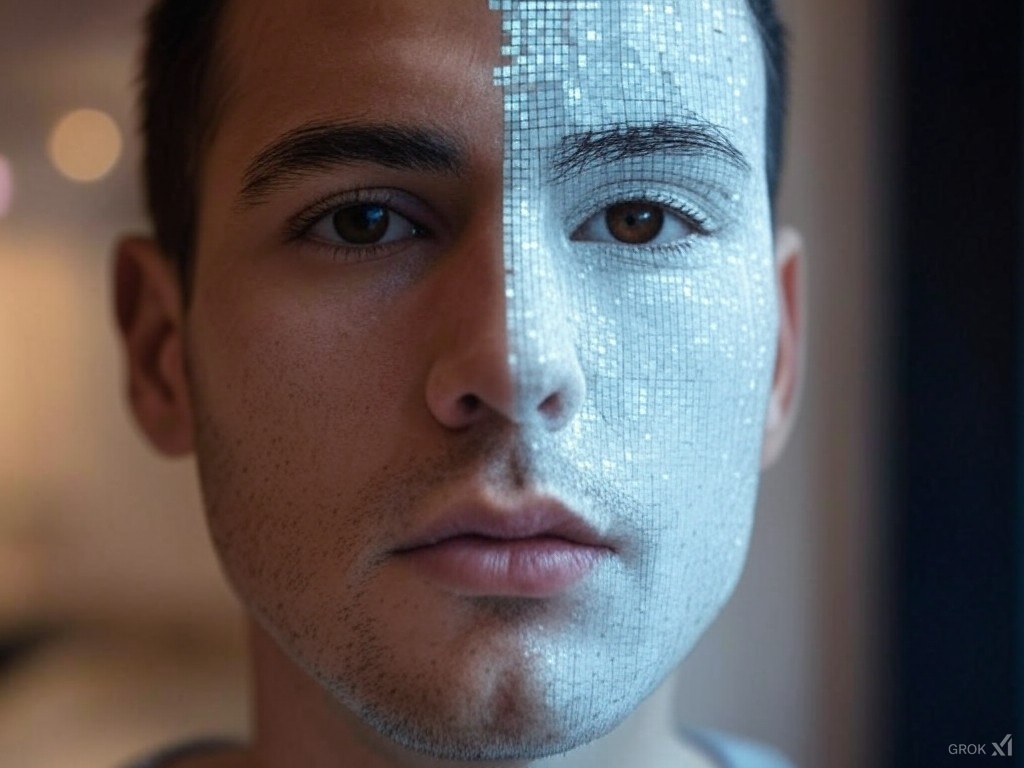

Trapped in a Digital Illusion – The Deepfake Deception

A Mother’s Worst Nightmare

Jennifer was driving home when her phone rang. The number was unknown, and as soon as she answered, her world shattered. On the other end, she heard her 15-year-old daughter sobbing, her voice trembling with fear.

“Mom, please help me! They have me—”

Then a man’s voice cut in, demanding ransom. Jennifer’s heart pounded. It was her daughter’s voice, every inflection and every nuance, begging for help. She was about to comply when she remembered something: her daughter was at ski practice without her phone. She called her husband, and within minutes the truth came out. The call was a scam: an AI-generated deepfake of her daughter’s voice, designed to manipulate her into sending money.

Jennifer wasn’t the only victim. Across the world, AI-generated deepfakes are being used to scam, deceive, and manipulate. And the scariest part? Scammers may need only a few seconds of your voice to make it happen.

The Rise of Digital Deception

Deepfake technology isn’t just a sci-fi concept. It is here, and it is evolving rapidly. Originally developed for entertainment and artificial intelligence research, deepfakes are now being put to darker uses. Criminals use AI to clone voices, create fake videos, and impersonate people with alarming accuracy.

Take the case of 23andMe, where hackers accessed sensitive genetic and personal data. While the breach wasn’t directly related to deepfakes, it highlights how personal data, whether voice recordings, DNA information, or facial images, can fall into the wrong hands. Imagine cybercriminals combining leaked personal data with cloned voices and faces to impersonate not just how someone sounds, but who they are.

In Jennifer’s case, the scammers didn’t need advanced hacking skills. They likely pulled her daughter’s voice from social media videos or an old voicemail. AI did the rest. The result? A fake emergency so convincing that any parent would panic.

The Threat Is Real, and Closer Than You Think

What makes deepfake scams so dangerous is how easily they can target anyone. If you’ve ever recorded a voice note, posted a video, or had a phone call intercepted, you could be at risk.

Here’s how scammers operate:

- Gathering Voice Samples – They extract audio from social media, voicemail recordings, or even short clips from online content.

- AI-Driven Voice Cloning – With just a few seconds of speech, AI can generate a convincing imitation of your voice.

- Creating a Fake Scenario – Scammers stage emotionally charged situations, such as fake kidnappings, accidents, or distress calls, to pressure victims into sending money or sensitive information.

It’s not just phone calls. Deepfake videos can make people appear to say things they never said. Imagine a fake video of you signing a document or approving a transaction. How would you prove it wasn’t real?

How Can You Protect Yourself?

With deepfakes becoming more sophisticated, staying vigilant is the best defense. Here’s how you can protect yourself:

- Verify Before You Act – If you get a distress call from a loved one, hang up and call them back directly on a number you already have saved, since caller ID can be faked. If they don’t answer, reach out to someone who can confirm their safety. Creating a family “safe word” can also help verify identity in emergencies.

- Limit Personal Data Exposure – Avoid posting voice recordings, biometric data, or sensitive videos online. Even a short clip on social media can be enough for AI to replicate your voice.

- Stay Informed – Cybercriminals constantly evolve their tactics. Educate yourself on the latest scams, and encourage friends and family to do the same.

- Use Multi-Factor Authentication (MFA) – Protect your online accounts with MFA to prevent unauthorized access, especially accounts that accept your voice or face as proof of identity. A cloned voice alone should never be enough to get in.

- Be Skeptical of Unusual Requests – If someone claims to be in trouble and urgently needs money, take a step back and assess the situation. Scammers thrive on panic and quick decisions.

The New Age of Digital Mistrust

Jennifer’s story is a warning to us all. In a world where artificial intelligence can mimic our voices and faces with startling precision, skepticism is no longer paranoia; it is a necessity.

The next time you hear a familiar voice begging for help, don’t let fear take over. Pause, verify, and think critically. In the age of deepfakes, what seems real might be nothing more than a digital illusion.

This article was written by Bryan Kaye Senfuma, Digital Rights Advocate, Digital Security Subject Matter Expert, Photographer, Writer and Community Advocate. You can email Bryan at: bryantravolla@gmail.com